The balance between security and competition

In recent months a battle has been playing out that has the potential to shape the future of the tech world.

On one side you have those who are trying to curb the power and dominance of Apple and Google and introduce a more competitive landscape. And on the other side you have the two Silicon Valley giants themselves, claiming that such a move would put end users of applications at risk.

As you know, Google Play and the Apple App Store are the official markets for publishing mobile apps on Android and iOS devices respectively. These are two incredibly convenient distribution channels, not only because the stores come preinstalled on the devices, but also because they’re fully integrated into the OS and provide lots of tools, from marketing through to security controls.

But publishing on these two official stores comes at a cost - literally. They both charge a standard service fee of 30% of the application price or in-app purchase transaction (with reduced rates in some cases, such as for smaller developers). Almost a third of the app cost is a hefty margin, which helps to explain why developers are exploring the possibility of publishing via third-party stores and distribution channels.

Some companies’ battles to escape the reality of what they see as an extortionate model have made newspaper headlines. We’ve written before, for example, about Epic Games (the developer of the hugely popular Fortnite) railing against Apple and Google’s hegemony.

At the same time, legislation is also emerging - such as the EU’s Digital Markets Act - which piles even more pressure on the tech giants to allow end users to install apps from third-party platforms.

And while some might celebrate this move to encourage increased competition, Apple and Google do have a point when they suggest that it might come to the detriment of security. This is particularly true if we focus on integrity control - the act of ensuring that an application hasn’t been tampered with and remains the same as the one developers handed over at the time of launch.

Publishing apps on third-party platforms would almost certainly lead to a loss of integrity control - at least in the short term.

In this article, we’ll take a look at some of the measures Apple and Google have in place to maintain application integrity. We’ll also explore the protection tools you as a developer would need to equip your app with to keep integrity control if it were to be published on another platform that doesn’t have these measures in place.

What do the two big app stores currently provide?

Secure application delivery

The industry standard of application distribution today is rather complex. Access to the Google Play/App Store management console is protected by a multifactor sign-in experience. Each application you create is required to be digitally signed, with the public certificate being managed through the same console.

These measures make sure that, once published, an application can only be updated by the same entity that created it - so long as you haven’t published your certificates and password on the Internet.

These days both of the big stores support a double signature scheme: one key is used to upload an app to the store, and another to sign the app itself. This scheme mitigates the risk of losing the original certificate and thus being unable to update the app - something which used to happen quite often.

The next step is a secure delivery system to the end user’s device, which happens via a protected communication channel. The device itself also checks the application signature, both against the signature of any previously installed version and against the version created in the store. This matters especially for iOS applications, where the signature can expire or be revoked. This multi-layered approach to secure application delivery and updates is the industry standard, and it has taken years of effort to reach this point.

Device and app attestation

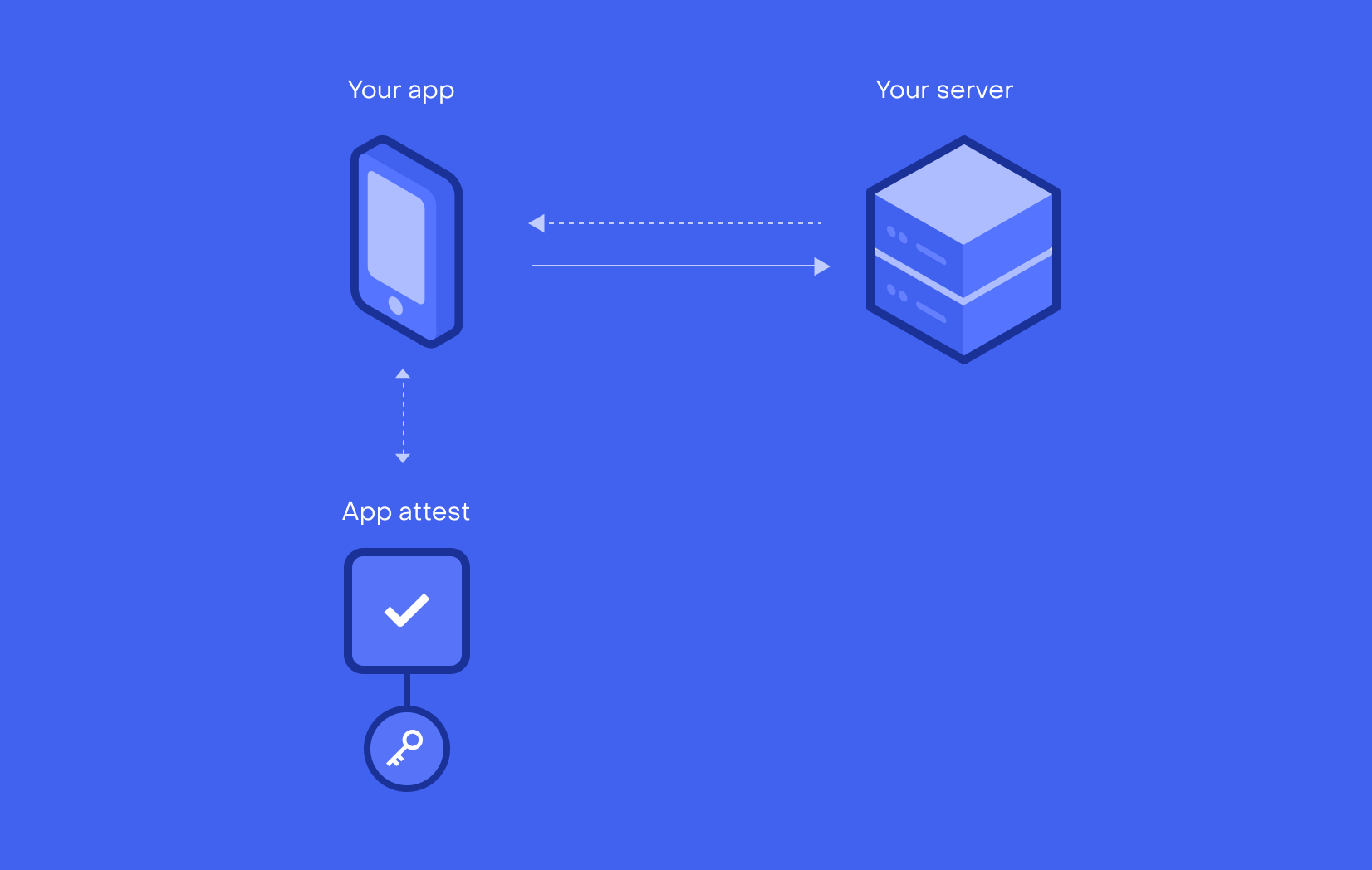

It’s important to remember that applications run on devices which are fully controlled by their owners. And this can be such a risky environment at times that you’d be forgiven for wondering whether it might be better not to operate there at all. Thus there needs to be a mechanism to assess the device your app is running on and to determine whether it’s safe.

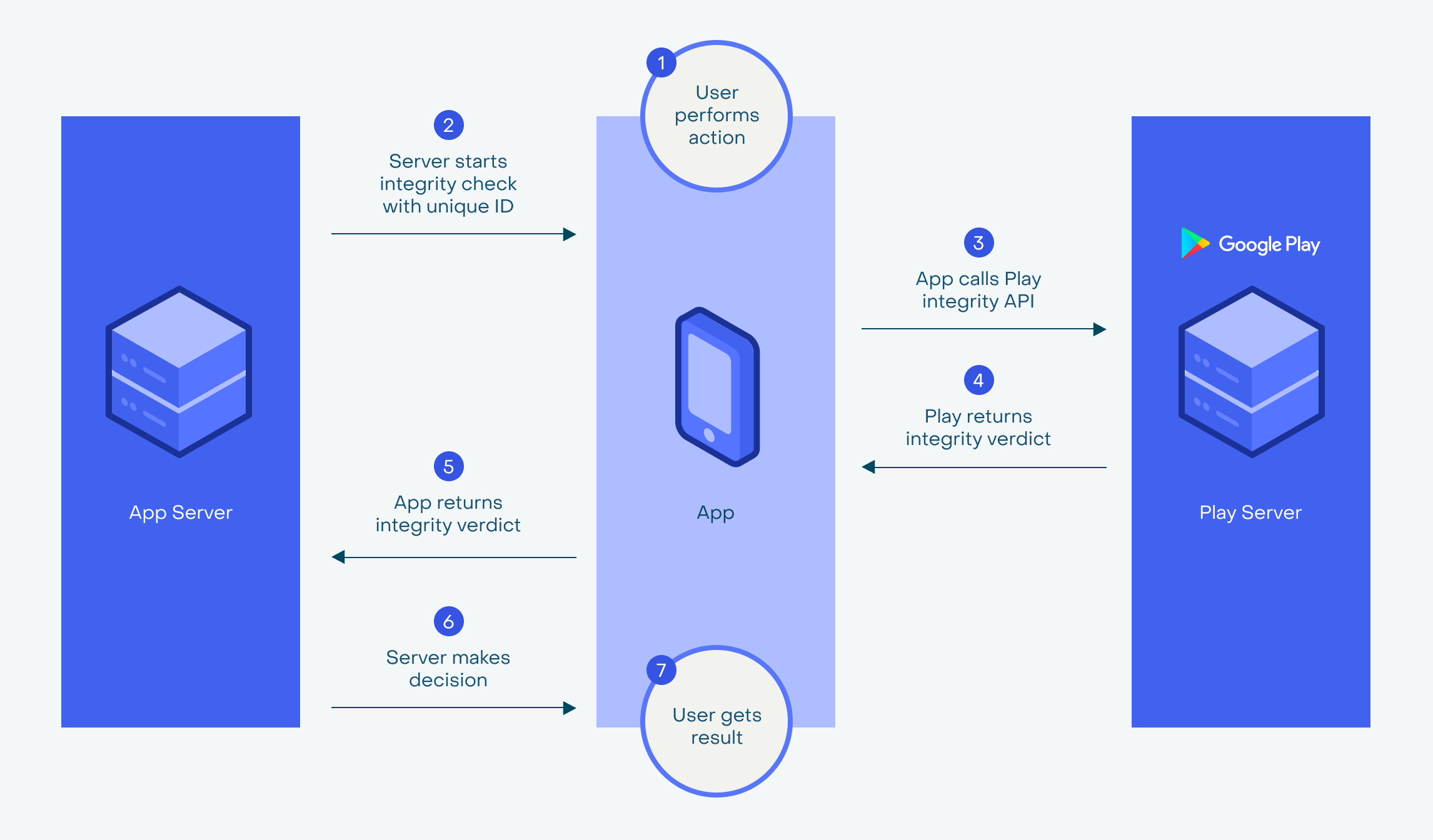

Both stores (Apple and Google) provide APIs that do just that. Google Play had the SafetyNet Attestation API, which has since been replaced by the Play Integrity API for verifying application and device integrity before critical actions. iOS offers the App Attest service (part of the DeviceCheck framework), which uses cryptographic tokens to help verify that requests come from a genuine, untampered copy of your application running on a genuine device. These APIs are an integral part of the two respective ecosystems.

Application monitoring

Visibility is a critical aspect of any solution. Application developers expect to be able to monitor their applications. But this is a more difficult task than monitoring server components, because you don’t control the device itself. To monitor the application in terms of performance, memory consumption and general health, additional steps have to be taken.

And application stores help with that in various ways. You can see the distribution of OS versions across your users’ devices, the fatal errors in your app, and the number of app crashes, for example.

An integrity control checklist for third-party publishing

So we’ve explored how the Google Play Store and the App Store help to maintain app integrity. Away from this in-built protection, though, applications must fight threats on their own.

It’s vital that developers understand this. While there may be some justifiable resentment at the fees Apple and Google charge, the sections above show that those fees come with a protection payoff.

If you’re considering shifting to third-party publishing platforms for your app, it’s important to consider the threats it might be up against. Clearly, threat models differ considerably between applications with different purposes. The risks a game faces might be very different to those of a banking application, for example. But determining this threat model is a crucial first step towards knowing which measures to take to protect your app.

Some of these steps - from the development, delivery and operation perspective - are listed below.

Reliable and secure delivery

As we learned earlier, we as developers want to make sure the application we publish reaches the user’s device intact and untouched. To do so we first need to cryptographically sign every release of the package with keys from the same keystore. This file should be stored safely: ideally so that only a delivery pipeline can access it. It should also be properly backed up. And the transmission should happen over a secured communication channel - both from the development infrastructure to a distribution service and from that service to an end device.

The security controls include encrypted traffic, client and server certificates and certificate rotation. The delivery channel should also be reliable and support upload/download resume, checksum verification, and monitoring of upload status. Access to the upload mechanism should also be protected by authentication, such as a login/password pair (as Apple does), a token (like MS App Center), or a certificate (as per Google Play).
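As a rough illustration of the "encrypted traffic, client and server certificates" part, here is a minimal Python sketch of a client-side TLS configuration for a delivery pipeline. The function name and its parameters are our own invention for this example, and the certificate paths would come from your own infrastructure; treat it as a starting point rather than a complete setup.

```python
import ssl
from typing import Optional


def build_upload_tls_context(
    client_cert: Optional[str] = None,
    client_key: Optional[str] = None,
) -> ssl.SSLContext:
    """Create a client-side TLS context for talking to a distribution service."""
    # create_default_context() turns on server certificate and hostname checks.
    context = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    # Refuse legacy protocol versions.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    if client_cert is not None:
        # Mutual TLS: also present a client certificate to the server.
        # The file paths are assumptions supplied by the caller.
        context.load_cert_chain(certfile=client_cert, keyfile=client_key)
    return context
```

Certificate rotation then becomes a matter of rebuilding the context with the new certificate files, without touching the rest of the pipeline.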

Cryptographically-ensured application integrity

Once the application is installed on the device, we want to verify the integrity of the package in case it was altered by agents on the device. The first step is to calculate a checksum. However, while a checksum can verify integrity, authenticity is another matter entirely. If the app transfer were intercepted, the attacker could simply replace the checksum along with the package, since the hashing function is easy to guess and needs no secret.
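To make the checksum step concrete, here is a small Python sketch that hashes a package file in chunks and compares the result with an expected value. The function names are ours, and SHA-256 is an assumption on our part; the approach works the same with any modern hash function.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 64 * 1024) -> str:
    """Hash a file in chunks so large packages need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_expected_checksum(path: Path, expected_hex: str) -> bool:
    """Compare the computed digest with the published one."""
    return hmac.compare_digest(sha256_of_file(path), expected_hex.lower())
```

Note that nothing here is secret: anyone who can replace the package can also recompute the checksum, which is exactly the authenticity gap described above.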

A more sophisticated approach is a keyed-hash message authentication code (HMAC), where a secret key is used as part of the hashing algorithm. The advantage gained here is that such a hash cannot be recreated without knowing the secret key, which resides only on the application server. So, if the application were compromised, it would be computationally infeasible for an attacker to create a valid hash for it.
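A minimal sketch of this idea in Python, using the standard library’s hmac module, might look as follows. The function names are illustrative, and we assume HMAC-SHA256 and a key that is generated and stored on the server side only.

```python
import hashlib
import hmac


def sign_package(package_bytes: bytes, secret_key: bytes) -> str:
    """Compute an HMAC-SHA256 tag over the package contents."""
    return hmac.new(secret_key, package_bytes, hashlib.sha256).hexdigest()


def verify_package(package_bytes: bytes, secret_key: bytes, received_tag: str) -> bool:
    """Recompute the tag and compare it in constant time."""
    expected_tag = sign_package(package_bytes, secret_key)
    return hmac.compare_digest(expected_tag.encode(), received_tag.encode())
```

Unlike a plain checksum, changing either the package or the key produces a different tag, so an attacker who alters the package cannot produce a matching tag without the key.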

Application-level mechanism of device and self-assessment

Another threat you might want to protect against is running the app on a compromised device. Once a device is rooted or jailbroken, it tends to leave indirect signs. On Android, for example, the app may be able to call su, read the /data/data folder, or find a superuser.apk somewhere on the filesystem.

For iOS, such checks include looking for an installed Cydia app or checking whether the "cydia" URL scheme is available. These heuristics are not stable: they vary from version to version of the OS and from one jailbreak to another. You might want to implement these checks as well, and keep them up to date.
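The filesystem part of these heuristics can be sketched in a few lines. The example below is written in Python purely for illustration (a real app would do this in its native language), and the indicator paths are a small, illustrative list of our own, not an authoritative or complete one.

```python
import os
from typing import Iterable, List

# Illustrative indicator paths only; real lists change between OS versions and tools.
ANDROID_ROOT_INDICATORS = (
    "system/bin/su",
    "system/xbin/su",
    "system/app/Superuser.apk",
)
IOS_JAILBREAK_INDICATORS = (
    "Applications/Cydia.app",
)


def find_compromise_indicators(filesystem_root: str, relative_paths: Iterable[str]) -> List[str]:
    """Return the indicator paths that exist under the given filesystem root."""
    return [
        relative_path
        for relative_path in relative_paths
        if os.path.exists(os.path.join(filesystem_root, relative_path))
    ]
```

A non-empty result is only a hint, not proof: these files can be hidden or renamed, so a check like this is best combined with other signals.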

Also, in the case of popular applications with hundreds of thousands of users (or even millions of them), another problem arises when the application is used by bots for spam, multi-account registration, and so on. Some countermeasures are purely server-side, like limiting the number of particular requests per second, per device. But the application itself can also try to fingerprint user behaviour and estimate whether it is being driven by an automated script.
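The server-side limit on requests per device could be sketched as a sliding-window counter. The class below is a hypothetical, in-memory Python example; the name, the device identifier and the explicit now parameter (passed in to keep the logic easy to test) are all our assumptions.

```python
from collections import defaultdict, deque
from typing import Deque, Dict


class PerDeviceRateLimiter:
    """Allow at most max_requests per device within a sliding time window."""

    def __init__(self, max_requests: int, window_seconds: float) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._history: Dict[str, Deque[float]] = defaultdict(deque)

    def allow(self, device_id: str, now: float) -> bool:
        timestamps = self._history[device_id]
        # Drop requests that have fallen out of the window.
        while timestamps and now - timestamps[0] >= self.window_seconds:
            timestamps.popleft()
        if len(timestamps) >= self.max_requests:
            return False
        timestamps.append(now)
        return True
```

In production you would typically pass time.monotonic() as now and keep the counters in shared storage rather than in process memory.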

Server-side application attestation

Applications and games can include paid functionalities. And once a transaction starts, we want to make sure it is not a fraudulent one. Verifying the application binary, checking that it was installed from a trusted source, and confirming the device integrity are common measures to do that.

Most of this requires the participation of the server side. The way it might work is by exchanging an encrypted message between an application server, the installation source and the app itself. If the application was installed from an untrusted location or has been tampered with, then the check should not pass. This is the mechanism the Google Play Integrity API uses, and this is what you might want to replicate if your app were published on a third-party platform.
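To show the shape of such an exchange, here is a deliberately simplified Python sketch. It is not the Play Integrity token format: we assume a hypothetical installation source that shares a secret key with the application server and signs its verdict with an HMAC (so the message is authenticated rather than encrypted). All names and verdict fields are invented for this example.

```python
import hashlib
import hmac
import json
import secrets
from typing import Set


def issue_nonce(pending: Set[str]) -> str:
    """Application server: create a one-time challenge for the app."""
    nonce = secrets.token_hex(16)
    pending.add(nonce)
    return nonce


def sign_verdict(verdict: dict, shared_key: bytes) -> str:
    """Hypothetical installation source: sign its verdict about the app."""
    payload = json.dumps(verdict, sort_keys=True)
    tag = hmac.new(shared_key, payload.encode(), hashlib.sha256).hexdigest()
    return payload + "." + tag


def verify_verdict(token: str, shared_key: bytes, pending: Set[str],
                   expected_package: str, expected_digest: str) -> bool:
    """Application server: accept only a fresh, authentic, clean verdict."""
    payload, _, tag = token.rpartition(".")
    expected_tag = hmac.new(shared_key, payload.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected_tag.encode(), tag.encode()):
        return False
    verdict = json.loads(payload)
    nonce = verdict.get("nonce")
    if nonce not in pending:
        return False  # unknown or replayed challenge
    pending.discard(nonce)  # each nonce is valid once
    return (
        verdict.get("package") == expected_package
        and verdict.get("digest") == expected_digest
        and verdict.get("trusted_install") is True
    )
```

The app only relays the token: it never holds the key, so a tampered app cannot forge a passing verdict, and the single-use nonce stops an old verdict from being replayed.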

Integrity monitoring

Let’s assume the application successfully reaches the user’s device with its integrity verified, and the safety of the device confirmed. There’s nothing to say it will stay this way forever. The user might catch a trojan, obtain root privileges on the device, or intentionally try to break the application integrity. A user could even try to access the memory dump of the application to search for runtime secrets.

As a developer, you would want to know if any of these incidents had happened. It’s especially important to detect attempts to compromise the application integrity and report them to the application server. Having such monitoring in place allows you to block access to sensitive features, mark the user as a potential fraudster, or just save the information for future use.
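On the server, the three responses just described can be expressed as a simple policy table. The Python sketch below is hypothetical: the event kinds and the mapping to actions are our own examples and would need to reflect your app’s threat model.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    LOG_ONLY = "log_only"
    FLAG_USER = "flag_user"
    BLOCK_SENSITIVE = "block_sensitive"


@dataclass(frozen=True)
class IntegrityEvent:
    user_id: str
    kind: str  # e.g. "root_detected", as reported by the app


# Hypothetical policy: which response each reported incident triggers.
POLICY = {
    "root_detected": Action.FLAG_USER,
    "binary_tampered": Action.BLOCK_SENSITIVE,
    "memory_dump_attempt": Action.BLOCK_SENSITIVE,
}


def decide_action(event: IntegrityEvent) -> Action:
    """Unknown event kinds are still recorded, just without further action."""
    return POLICY.get(event.kind, Action.LOG_ONLY)
```

Keeping the policy as data rather than code makes it easier to tighten or relax responses as you learn which signals are reliable.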

Don’t compromise your app’s integrity

As we’ve highlighted in this article, the implementation of proper integrity control is not an easy task. What’s more, integrity control doesn’t only mean securing app distribution and app/device attestation. The next major component of integrity control is the protection of application runtime memory and defending against malicious code injection.

The number of mobile security incidents around the world right now shows us that there are no completely reliable, secure solutions from mobile platform vendors themselves. In most cases, developers need to implement solutions from commercially-available protection products.

Mobile applications are valuable - often critical - assets for modern businesses. When considering publishing your app outside the two official app stores, it’s worth weighing up the tools you’ll lose access to by leaving them.

If you do decide to publish on a third-party platform, it’s vital that you equip your application with protection mechanisms that maintain its integrity.

Whether you secure your app yourself or you look for a solution from a mobile app protection vendor, be sure to focus on integrity control. Check that the vendor places a high value on application integrity and that they offer the protection measures we’ve set out in the checklist in this article. Then you’ll be sure that any potential gaps have been covered.